Adventures in End-to-End Testing with Cypress

June 3, 2019

Published by Daniel Smith


As part of our migration from Angular to React, we decided to replace our existing e2e testing suite with Cypress. In this post, we'll go over why we chose Cypress and the changes and tweaks we made to fit it into our current workflow.

Why Cypress?

Before we made the jump to Cypress, we were using Protractor to run our tests. Protractor made a lot of sense for us at the time, being designed specifically for Angular. As with most browser testing frameworks at the time, it was running on Selenium, which most of you reading this will be familiar with, so you don't need me to tell you how slow and flaky it can be sometimes (not always its fault, to be fair).

We were also using Cucumber to make the tests easier to collaborate on cross-discipline. Whilst this sounded pretty cool at the time, we found that it actually made writing tests slower, since we had to wire up any missing steps in code once we'd written the test, then go back and check it worked. It also made finding out why a test had failed more challenging than we'd expected, and our steps were typically too specific to be reused in other tests.

The end result was a set of tests that gave us false positives (eroding our confidence in them) and that nobody wanted to work with. We also had a separate set of tests being maintained in GhostInspector, an online tool a couple of the QAs had tried out previously and were still running. Since we were rewriting chunks of the site in React, we thought it would be a better use of our time to start again with a single source of tests written in a consistent format.

Whilst looking into alternatives, I stumbled upon Cypress in a blog post. The main draw for us was the simple API and the nice test runner interface, which made it easy for everyone to understand what was happening in the test (and step back to see the state, which still looks cool). Back then it was still in beta, but after spiking out some tests and demoing it to the team, we decided to give it a shot. For more of an idea of what Cypress can do, check out their Features list.

Getting up and running

Rather than go over the basics of how to install and run Cypress, I'll point you to their Getting Started guide, which does a pretty good job of helping you write your first test. I'd also suggest reading most of the guide since they explain a lot in good detail. What I want to focus on for this post is how we tackled the specific challenges we faced along the way and the decisions we made to make the tests fit our current workflow.

Seed data

One of the main drawbacks of our previous test suites was that we had to rely on our seed data being in a very specific state for our tests to run successfully. For example, if we ran the test to raise an absence several times in a row, we would suddenly get a failure because the absence the current run created was off-screen. This resulted in us wiping the database before each run, with a SQL script to generate a set of users in the exact required state. Not only was it fiddly to update a user if our tests needed to evolve with our changes, but it also meant we were potentially hiding performance issues with larger data sets.

Luckily, Cypress gives you several ways to create seed data per test run. Their Testing your app guide outlines three:

- cy.exec() - to run system commands

- cy.task() - to run code in Node.js via the pluginsFile

- cy.request() - to make HTTP requests

We decided to go with making HTTP requests directly to the API in question, since we didn't want to have to manually maintain the database and it meant the endpoints for creating companies and users would be tested as part of the flow, so if we break something in one of those endpoints, we'll know straight away (this has already paid off more than once).

To do this, we simply import a script in support/index.js and define a before block to configure our employees:

    before("Create seed data", () => {
      applyFeatures()
        .then(() => registerCompany())
        .then(() => {
          skipOnboarding();
          getDefaultWtp();
          getEmployeeDefaults();
        })
        .then(() => {
          addEmployee("admin");
          addEmployee("manager");
          addEmployee("employee");
          addVariableEmployee("variableEmployee");
        })
        .then(() => {
          setAdmin("admin");
          setManager("manager", ["employee", "variableEmployee"]);
        });
    });

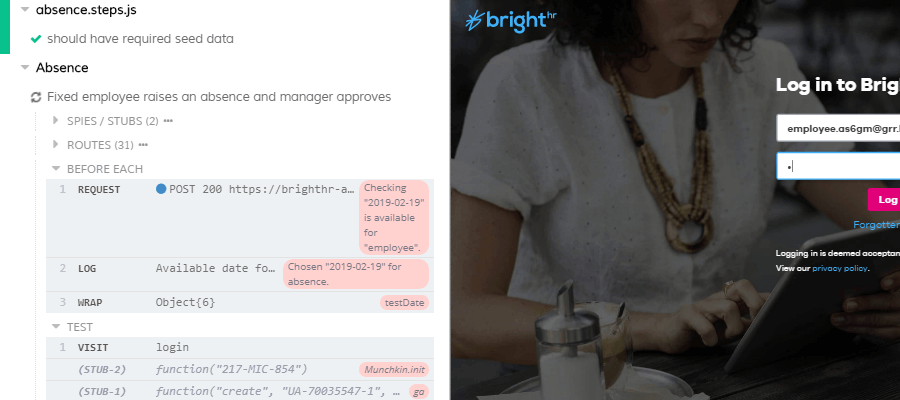

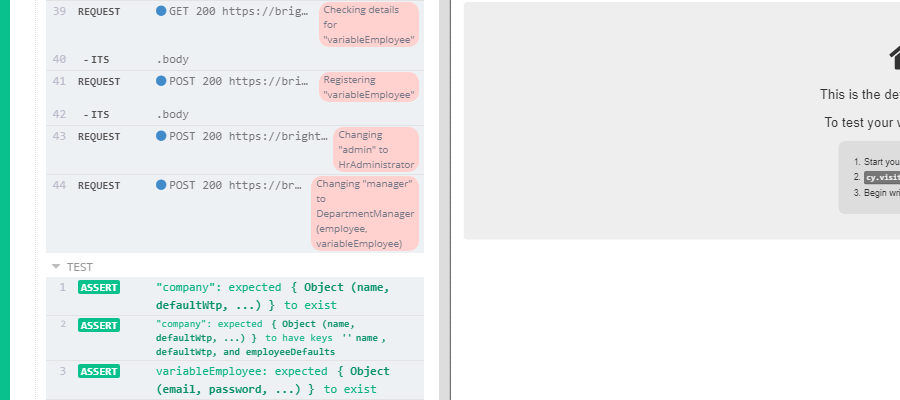

Each of these steps sends off a request to the API with randomly-generated data (emails, passwords, etc) and, where required, stores that information in Cypress.env to be accessed by other tests. In the same file, we specify a test to assert the seed data has been created. Here's a trimmed-down example:

    describe(Cypress.spec.name, () => {
      it("should have required seed data", () => {
        [
          "variableEmployee",
          "employee",
          "manager",
          "admin",
          "pointOfContact",
        ].forEach((username) => {
          expect(Cypress.env(env[username]), username).to.exist.and.have.all.keys([
            "email",
            "firstName",
            "lastName",
            "password",
            "userId",
          ]);
        });
      });
    });

Note: We create the data in a before hook rather than inside the test itself because the hook still runs when someone is developing with it.only, whereas data created as part of this test would be skipped.

We also label every API call with the as function so we get a nice, readable output in the test runner:

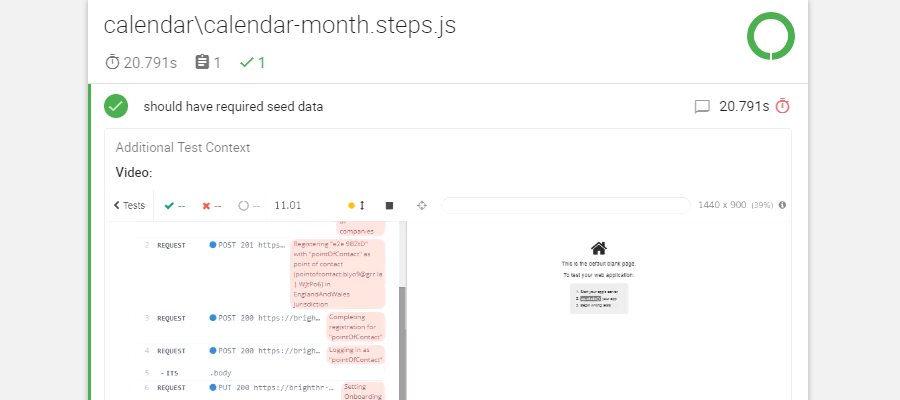

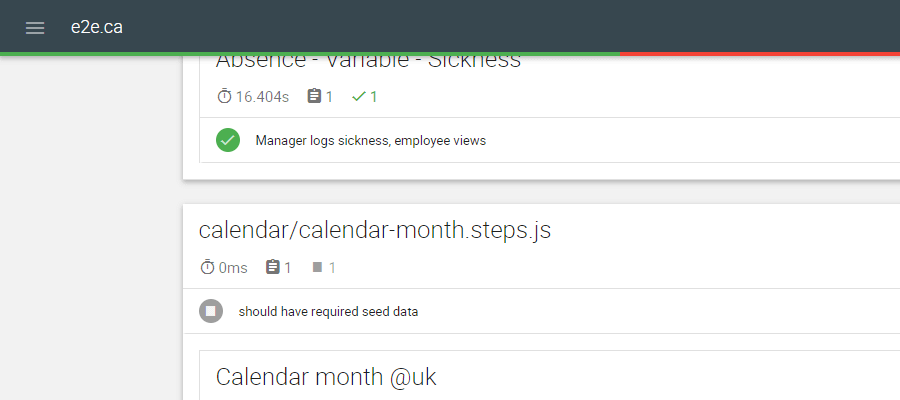
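To make the seed steps a little more concrete, here's a rough sketch of what a helper such as addEmployee might look like. It isn't our actual implementation: the /api/employees endpoint, the payload shape and the id field on the response are all assumptions made up for illustration. Only cy.request, .as, .then and Cypress.env are real Cypress APIs.

```javascript
// Hypothetical seed helper. The endpoint, payload and response shape are assumptions.
const randomString = () => Math.random().toString(36).slice(2, 10);

// Build a throwaway user with randomly-generated details.
const buildEmployee = (username) => ({
  firstName: `Test-${username}`,
  lastName: randomString(),
  email: `${username}.${randomString()}@example.com`,
  password: randomString() + randomString(),
});

const addEmployee = (username) => {
  const employee = buildEmployee(username);

  return cy
    .request({ method: "POST", url: "/api/employees", body: employee })
    .as(`create-${username}`) // label the call so it reads nicely in the test runner
    .then((response) => {
      // Store the details so later tests can log in as this user.
      Cypress.env(username, { ...employee, userId: response.body.id });
    });
};
```

A test can then read the stored user back with Cypress.env("admin") and use the email and password to log in.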

Reporting

Cypress has a pretty nice-looking Dashboard service that collates your reports into a single dashboard. We decided to use Mochawesome instead, generating a snazzy HTML report that could be surfaced as a tab in our TeamCity builds and giving us more control over the structure of the report itself. When we were starting, Cypress 2.1.0 and lower made this very straightforward: we specified Mochawesome as our reporter in cypress.json and configured it as per its readme. When 3.0 came around (just before we were about to start using it in earnest), they added parallelisation, which broke our reporting; instead of a report covering every spec file, we received a report for the last spec file only. After looking into it, I realised that it was generating the report for each spec file, overwriting the previous one each time. Taking cues from that issue thread, I tweaked cypress.json to not overwrite files and wrote a simple Node script, which would:

- Run the Cypress tests

- Merge the JSON files for each Mochawesome file

- Restructure the output so that our seed data test was the parent test for any other tests in the spec file

- Append any screenshots to the correct tests

- Append the video for the spec file to the parent test

- Clean up the old JSON files

Since I wrote the script, mochawesome-merge has been released, which tackles the first two steps in the list above, so I'd recommend most people use that and leverage Mochawesome's addContext to add screenshots. If you'd like to see how we've done ours though, I've created a Gist with our script, ci.js.
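As a rough illustration of the merge step (not the contents of ci.js), the sketch below combines several parsed report objects into one. It assumes a simplified report shape of { stats, results }; real Mochawesome reports carry more fields (timestamps and percentages, for example) that would need recalculating, and mergeReports is a name made up for this example.

```javascript
// Simplified sketch: assumes each parsed report looks like { stats: {...}, results: [...] }.
const SUMMED_STATS = ["suites", "tests", "passes", "pending", "failures", "duration"];

const mergeReports = (reports) =>
  reports.reduce(
    (merged, report) => {
      // Add up the counters from every per-spec report.
      SUMMED_STATS.forEach((key) => {
        merged.stats[key] = (merged.stats[key] || 0) + (report.stats[key] || 0);
      });
      // Keep every spec's results side by side in the combined report.
      merged.results.push(...report.results);
      return merged;
    },
    { stats: {}, results: [] }
  );
```

In a script like ours, the input would come from reading and parsing each JSON file in the report folder, and the merged object would then be handed to the HTML report generator.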

Multiple environments

We wanted to be able to run our test suite on a number of different environments, but passing a bunch of arguments on the command line felt clunky and required a good memory for all of the parameters and URLs (which I for one certainly don't possess). Cypress already covers one approach to this in their Configuration API guide, which basically tells Cypress to override any settings in your main config with those found in the specified config file. To make it super simple to run, we added a couple of npm scripts to package.json:

    "scripts": {
      "cypress:open": "cypress open",
      "cypress:run": "node ci.js", // script outlined in Reporting, takes same arguments
      "clean-reports": "rimraf cypress/report/*",
      "test": "run-s clean-reports \"cypress:run -- --env configFile={1:=e2e.uk},tags={2:=@smoke}\" --",
      "test:ci": "run-s clean-reports \"cypress:run -- --reporter=mocha-multi-reporters --env configFile={1:=e2e.uk},tags={2:=@smoke}\" --",
      "start": "run-s \"cypress:open -- --env configFile={1:=e2e.uk},tags={2:=@smoke}\" --",
      "help": "node help.js",
    }

We're using npm-run-all to accept arguments to our scripts, which lets us simply run a command like npm start local.uk or npm run test:ci e2e.ca and Cypress will load the correct config file with any other required parameters. I also created a help script that would list the available configuration files.
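For anyone who hasn't seen the config-file approach, here's a minimal sketch of a plugins file that picks up the configFile value. It's loosely modelled on the approach in the Cypress guide rather than copied from our repo; the cypress/config folder and the loadConfigFile helper are assumptions for illustration.

```javascript
// cypress/plugins/index.js (sketch). The cypress/config folder is an assumed layout.
const fs = require("fs");
const path = require("path");

// Read <configDir>/<name>.json and return its contents as an object.
const loadConfigFile = (configDir, name) =>
  JSON.parse(fs.readFileSync(path.join(configDir, `${name}.json`), "utf8"));

const plugins = (on, config) => {
  // configFile arrives via `--env configFile=...`; fall back to the script default.
  const name = config.env.configFile || "e2e.uk";
  const configDir = path.join(config.projectRoot, "cypress", "config");

  // Values returned here override the matching values in cypress.json.
  return loadConfigFile(configDir, name);
};

module.exports = plugins;
```

With this in place, a file such as cypress/config/local.uk.json only needs to contain the settings that differ from the main config, for example the baseUrl and region.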

Tagging

With multiple environments possible, the next step was making sure not every test runs on every environment. Cypress currently doesn't support Mocha's grep feature for tagging tests, though it might in the future. After implementing our solution, I was made aware of the cypress-select-tests plugin, which I'd recommend for most cases.

We specify the region in our config file, so our code can make decisions based on that. We originally started by wrapping our tests in if blocks, but it was messy and made the tests harder to read, so I ended up using cucumber-tag-expressions to leverage Cucumber's tagging syntax, with the relevant region tags already specified.

    import { TagExpressionParser } from "cucumber-tag-expressions";

    const region = Cypress.env("region");
    const otherRegions = Cypress.env("supportedRegions")
      .filter((r) => r !== `@${region}`)
      .join(" or ");
    const tags = Cypress.env("tags");

    // Exclude ignored tests and other regions, then apply any tags passed in
    const tagger = new TagExpressionParser().parse(
      `not (@ignore or ${otherRegions})${
        tags == null || tags === "" ? "" : ` and (${tags})`
      }`
    );

    before(function () {
      const suites = this.test.parent.suites.slice(1);
      suites.forEach(checkSuite);
    });

    // match() returns null when a title has no tags, so fall back to an empty list
    const shouldSkip = (test) =>
      !tagger.evaluate(test.fullTitle().match(/@\S+/g) || []);

    const checkSuite = (suite) => {
      if (suite.pending) return;
      if (shouldSkip(suite)) {
        suite.pending = true;
        return;
      }
      (suite.tests || []).forEach((test) => {
        if (shouldSkip(test)) test.pending = true;
      });
      (suite.suites || []).forEach(checkSuite);
    };

The above is imported into support/index.js and runs before each suite kicks off, skipping tests if they contain a tag that doesn't match the specified region. We also updated our seed data test to skip if all of the other tests have been marked as skipped. Combined with the package scripts, this means we can run npm run test:ci e2e.uk @smoke in our CI environments and something like npm test local.ie "@absence and @manager" to run a larger regression suite for a particular area of code, based on our tags.
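To show what ends up being evaluated, here's a small standalone sketch of the two string-handling pieces: building the tag expression and pulling tags out of a test title. The function names are made up for this example, and the sketch leaves out the cucumber-tag-expressions parser itself so that it runs without any dependencies.

```javascript
// Build the expression handed to the tag parser (helper names are illustrative).
const buildTagExpression = (region, supportedRegions, tags) => {
  // Always exclude @ignore plus every region other than the current one.
  const excluded = ["@ignore", ...supportedRegions.filter((r) => r !== `@${region}`)];
  const base = `not (${excluded.join(" or ")})`;
  return tags ? `${base} and (${tags})` : base;
};

// Pull the @tags out of a full test title; returns [] when there are none.
const extractTags = (title) => title.match(/@\S+/g) || [];

console.log(buildTagExpression("uk", ["@uk", "@ie", "@ca"], "@smoke"));
// prints: not (@ignore or @ie or @ca) and (@smoke)

console.log(extractTags("Absence @absence @manager can approve a request"));
// prints: [ '@absence', '@manager' ]
```

So a suite titled with @ie is skipped on a uk run, and a test with no @smoke tag is skipped whenever @smoke is passed in.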

Minor issues

We also had a couple of minor issues that I wanted to mention here. Versions of Cypress prior to 3.1.4 would have issues if a page had incomplete XHR requests when the test ended, which would cause the test to sometimes fail after a success. Whilst this is no longer relevant, I thought the workaround was still interesting and possibly useful for intercepting other errors if required, so here's what we were doing within our support/index.js:

    Cypress.on("uncaught:exception", hackToNotFailOnCancelledXHR);
    Cypress.on("fail", hackToNotFailOnCancelledXHR);

    function hackToNotFailOnCancelledXHR(err) {
      const realError =
        Cypress._.get(err, "message", "").indexOf(
          "Cannot set property 'aborted' of undefined"
        ) === -1;
      if (realError) throw err;
      else {
        console.warn(err);
        return false;
      }
    }

The other minor issue some of us had was running the tests from the GUI with Chrome. Running them with Electron (which is what is used by default from the command line and in our CI environment) was fine, but in Chrome the site would burst out of the test runner and break everything (both on Windows 10 and OS X). 3.1.5 fixed this on my home machine but not on my work one; either way, it's a minor inconvenience at most.

What's next?

We're pretty happy with the current state of our Cypress tests, at least in terms of technical implementation; they are faster and more stable than our old suites, false positives are way down (after some bumps in the road) and we have a good base to build on. We've also caught things before they went any further in the pipeline, which is always a win.

We're currently updating the tests to support our new single sign-on login process, but I think our next big challenge will be the structure and nature of the tests themselves, rather than technical implementation details. We need to ensure the tests are useful and cover as much ground as practical, without duplicating other tests or adding thousands of the things, sticking mostly to the happy paths (all hurdles we've face-planted over in the past). With that in mind, we need to take a look at our current tests and evaluate which ones work, which ones need improvement and any gaps in our coverage. With both Cypress and our move to using Jest with our React components, I think we'll get there.

e2e: End to End. CI: Continuous Integration.
